The Legislative Data Web Viewer

Publishing data in a structured form opens up many possibilities. For example, we can build a fully featured web viewer for inquiry and Hansard records, making legislative data more accessible to users. To demonstrate some of these possibilities, we built a demo viewer on top of the parsed data.

Backend

The project consists of several components. The backend exposes the data through a set of APIs, which we built with Django and Django REST framework. The legislative data is stored in a PostgreSQL database through the Django ORM, so the first step is to replicate the schema as ORM models, as shown in the example below:

from django.db import models

# Person is assumed to be defined earlier in this module (or imported).


class Hansard(models.Model):
    present = models.ManyToManyField(
        Person, related_name="hansard_presents", related_query_name="hansard_present"
    )
    absent = models.ManyToManyField(
        Person, related_name="hansard_absents", related_query_name="hansard_absent"
    )
    guest = models.ManyToManyField(
        Person, related_name="hansard_guests", related_query_name="hansard_guest"
    )
    officer = models.ManyToManyField(
        Person, related_name="hansard_officers", related_query_name="hansard_officer"
    )
    # Source document of the sitting (AKN, presumably Akoma Ntoso)
    akn = models.TextField()


class Inquiry(models.Model):
    is_oral = models.BooleanField()
    inquirer = models.ForeignKey(
        Person,
        related_name="inquirers",
        related_query_name="inquirer",
        # Refuse to delete a Person who is still referenced by an inquiry
        on_delete=models.PROTECT,
    )
    respondent = models.ForeignKey(
        Person,
        related_name="respondents",
        related_query_name="respondent",
        on_delete=models.PROTECT,
    )
    number = models.IntegerField()
    # CharField without max_length requires PostgreSQL and Django 4.2 or later
    title = models.CharField(null=True)
    akn = models.TextField()

    class Meta:
        ordering = ["number"]

A notable model is Hansard, which can contain both question sessions and speeches. To simplify working with both types, we expose a computed property named debate that merges them into a single list ordered by their position (idx), as shown below.

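# At the top of models.py: the future import must be the first statement, and it
# lets the annotation refer to Speech and QuestionSession before they are defined
from __future__ import annotations

from itertools import chain


class Hansard(models.Model):
    ...  # fields shown above

    @property
    def debate(self) -> list[Speech | QuestionSession]:
        # Merge speeches and question sessions into one list,
        # ordered by their position (idx) within the hansard
        return sorted(
            chain(
                self.speeches.all(),  # type: ignore
                self.sessions.all(),  # type: ignore
            ),
            key=lambda item: item.idx,
        )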
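To make the ordering logic concrete without a database, here is a minimal stdlib-only sketch. The Speech and QuestionSession dataclasses are hypothetical stand-ins for the Django models, assuming only that both carry an integer idx. It also swaps in a different technique: if each input is already ordered by idx, heapq.merge can interleave them in a single pass instead of re-sorting everything. This is an alternative for illustration, not the project's code.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass


# Hypothetical stand-ins for the Speech and QuestionSession models;
# only idx (position within the sitting) matters for ordering.
@dataclass
class Speech:
    idx: int
    text: str


@dataclass
class QuestionSession:
    idx: int
    title: str


def debate(
    speeches: list[Speech], sessions: list[QuestionSession]
) -> list[Speech | QuestionSession]:
    # Both inputs are assumed to be sorted by idx already, so heapq.merge
    # interleaves them lazily without a full re-sort.
    return list(heapq.merge(speeches, sessions, key=lambda item: item.idx))


items = debate(
    [Speech(0, "Opening remarks"), Speech(3, "Closing remarks")],
    [QuestionSession(1, "Question 1"), QuestionSession(2, "Question 2")],
)
print([type(item).__name__ for item in items])
# ['Speech', 'QuestionSession', 'QuestionSession', 'Speech']
```

With the Django models, this variant would only be correct if both querysets were explicitly ordered by idx; without that guarantee, sorted(chain(...)) is the safer choice.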

The corresponding serializers for Hansard and its debate field look like this:

from typing import Any

from rest_flex_fields import FlexFieldsModelSerializer
from rest_framework import serializers


# Defined first, because HansardSerializer references it while its class body runs
class DebateSerializer(serializers.BaseSerializer):
    def to_representation(self, instance: Speech | QuestionSession) -> dict[Any, Any]:
        # Tag each item with its model name so the client knows how to render it
        return {
            "type": type(instance).__name__,
            "value": (
                SpeechSerializer(instance, many=False, read_only=True)
                if isinstance(instance, Speech)
                else QuestionSessionSerializer(instance, many=False, read_only=True)
            ).data,
        }


class HansardSerializer(FlexFieldsModelSerializer):
    class Meta:
        model = Hansard
        fields = ["id"]
        # Related data is only included when requested through ?expand=...
        expandable_fields = {
            "present": (PersonSerializer, {"many": True, "read_only": True}),
            "absent": (PersonSerializer, {"many": True, "read_only": True}),
            "guest": (PersonSerializer, {"many": True, "read_only": True}),
            "debate": (DebateSerializer, {"many": True, "read_only": True}),
        }
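DebateSerializer emits a tagged union: every item carries its type name next to its serialized value, so the frontend can choose the right component. The stdlib-only sketch below reproduces that output shape with hypothetical dataclass stand-ins, using a dispatch table in place of the isinstance check.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass
from typing import Any, Callable


# Hypothetical stand-ins; the real serializers expose more fields.
@dataclass
class Speech:
    idx: int
    text: str


@dataclass
class QuestionSession:
    idx: int
    title: str


# One serializing function per debate item type, keyed by class
SERIALIZERS: dict[type, Callable[[Any], dict[str, Any]]] = {
    Speech: asdict,
    QuestionSession: asdict,
}


def to_representation(instance: Speech | QuestionSession) -> dict[str, Any]:
    # Same tagged shape as DebateSerializer: "type" tells the client
    # how to interpret "value"
    return {
        "type": type(instance).__name__,
        "value": SERIALIZERS[type(instance)](instance),
    }


print(to_representation(Speech(0, "Opening remarks")))
# {'type': 'Speech', 'value': {'idx': 0, 'text': 'Opening remarks'}}
```

One design difference: with the dispatch table, an unexpected type raises a KeyError, whereas the if/else in DebateSerializer would serialize anything that is not a Speech as a QuestionSession.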

We then expose the API endpoints through Django REST framework viewsets:

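from rest_framework.viewsets import ReadOnlyModelViewSet


# Read-only viewsets provide only the list and retrieve actions
class InquiryViewSet(ReadOnlyModelViewSet):
    queryset = Inquiry.objects.all()
    serializer_class = InquirySerializer


class HansardViewSet(ReadOnlyModelViewSet):
    queryset = Hansard.objects.all()
    serializer_class = HansardSerializer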
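The URL configuration is not shown here, so the following is a simplified model under an assumption: that the viewsets are registered on a DRF DefaultRouter under /api/. A router then generates a list route and a detail route for each ReadOnlyModelViewSet, plus optional format suffixes, which would explain URLs such as /api/hansard.json used by the frontend. The regexes below are an illustration, not DRF's actual URL resolver; resolve is a hypothetical helper.

```python
from __future__ import annotations

import re

# Simplified model of DefaultRouter routes under a hypothetical /api/ prefix.
# [^/.]+ mirrors DRF's default lookup pattern; ".json" is the format suffix.
ROUTES = [
    (
        "retrieve",
        re.compile(
            r"^/api/(?P<prefix>[\w-]+)/(?P<pk>[^/.]+)(?:/|\.(?P<format>[a-z0-9]+)/?)$"
        ),
    ),
    (
        "list",
        re.compile(r"^/api/(?P<prefix>[\w-]+)(?:/|\.(?P<format>[a-z0-9]+)/?)$"),
    ),
]


def resolve(path: str) -> tuple[str, str | None, str | None] | None:
    # Return (action, primary key, format) for the first matching route
    for action, pattern in ROUTES:
        match = pattern.match(path)
        if match:
            return action, match.groupdict().get("pk"), match.group("format")
    return None


print(resolve("/api/hansard.json"))  # ('list', None, 'json')
print(resolve("/api/hansard/3.json"))  # ('retrieve', '3', 'json')
```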

Lastly, we import the data through a management command, as shown in the documentation.

Frontend

The frontend is built with React. Since the web viewer is a simple application, the implementation is straightforward. We use react-router-dom's createBrowserRouter to handle routing, and the API calls are made there too, in the route loaders, because each view needs only a single API call.

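import { createBrowserRouter } from "react-router-dom";

// Root, HansardList and Hansard are the application's own components
const router = createBrowserRouter([
  {
    path: "/",
    element: <Root />,
    children: [
      {
        path: "hansard",
        element: <HansardList />,
        // Each view needs a single API call, made in its route loader
        loader: async () => fetch("/api/hansard.json"),
      },
      {
        path: "hansard/:hansardId",
        element: <Hansard />,
        // Expand all related fields (attendance lists, debate) in one request
        loader: async ({ params }) => {
          const query = new URLSearchParams({ expand: "~all" });
          return fetch(`/api/hansard/${params.hansardId || ""}.json?${query}`);
        },
      },
      // …
    ],
  },
]);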
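For scripting against the same API outside the browser, here is a minimal Python sketch of the two URLs the loaders request. The paths are copied from the loaders above; hansard_list_url and hansard_detail_url are hypothetical helper names. The expand value ~all is accepted by drf-flex-fields as a wildcard for expanding all expandable fields.

```python
from __future__ import annotations

from urllib.parse import quote, urlencode

API_ROOT = "/api"  # same prefix that Caddy forwards to the backend


def hansard_list_url() -> str:
    # List endpoint with the .json format suffix, as in the HansardList loader
    return f"{API_ROOT}/hansard.json"


def hansard_detail_url(hansard_id: str | int, expand: str = "~all") -> str:
    # Detail endpoint; the expand parameter selects which related fields to include
    query = urlencode({"expand": expand})
    return f"{API_ROOT}/hansard/{quote(str(hansard_id))}.json?{query}"


print(hansard_detail_url(42))  # /api/hansard/42.json?expand=~all
```

Note that the browser's URLSearchParams encodes ~ as %7E while Python's urlencode leaves it unescaped; the backend decodes both to the same value.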

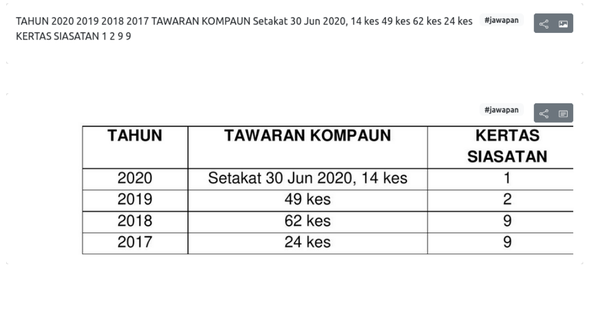

We use react-toolkit to manage state. Some tables in our application have both a text and an image representation, and users can click a toggle button to switch between the two.
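The toggle boils down to a small piece of state per table. As an illustration only, here is the reducer idea (take the old state and an action, return a new state) written in Python rather than the frontend's JavaScript. The table IDs, the "text"/"image" values and toggle_table_view are hypothetical, not taken from the project.

```python
from __future__ import annotations

from collections.abc import Mapping
from typing import Literal

# Hypothetical state shape: table id -> representation currently shown
View = Literal["text", "image"]


def toggle_table_view(state: Mapping[str, View], table_id: str) -> dict[str, View]:
    # Tables start in their text representation until first toggled
    current = state.get(table_id, "text")
    # Build a new state instead of mutating the old one,
    # so earlier states stay intact
    return {**state, table_id: "image" if current == "text" else "text"}


state = toggle_table_view({}, "table-1")  # {'table-1': 'image'}
state = toggle_table_view(state, "table-1")  # {'table-1': 'text'}
```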

A share button makes sharing easier: users can click it to copy a link to any snippet.
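A snippet link is commonly the page URL plus a fragment identifying the snippet. The sketch below assumes that each snippet has an element id such as speech-3 and uses a placeholder host, example.org; neither detail comes from the project, and snippet_link is a hypothetical helper. The standard library's urlsplit and urlunsplit replace any existing fragment while keeping the rest of the URL.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def snippet_link(page_url: str, snippet_id: str) -> str:
    # Keep scheme, host, path and query; replace only the #fragment
    scheme, netloc, path, query, _fragment = urlsplit(page_url)
    return urlunsplit((scheme, netloc, path, query, quote(snippet_id)))


print(snippet_link("https://example.org/hansard/12?expand=~all#top", "speech-3"))
# https://example.org/hansard/12?expand=~all#speech-3
```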

The application uses Bootstrap for the general look and feel. For our simple proof-of-concept application, it works well.

Search

The application offers full-text search through OpenSearch. On the backend, we integrate it with django-opensearch-dsl. Since the application does not need much configuration yet, the document definition for inquiry content is short:

from django_opensearch_dsl import Document, fields
from django_opensearch_dsl.registries import registry


@registry.register_document
class InquiryContentDocument(Document):
    # Nested objects indexed alongside each content element
    inquirer = fields.ObjectField(
        properties={"name": fields.TextField(), "raw": fields.TextField()}
    )
    inquiry = fields.ObjectField(
        properties={
            "title": fields.TextField(),
            "id": fields.IntegerField(),
            "number": fields.IntegerField(),
            "is_oral": fields.BooleanField(),
        }
    )

    class Index:
        name = "inquiry"

    class Django:
        model = InquiryContent
        # Model fields indexed as-is
        fields = ["id", "value"]
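To show what ends up in the inquiry index, here is a plain-Python sketch of a single indexed document, built by hand rather than through django-opensearch-dsl. The field names mirror the Document class above; build_inquiry_document and the sample values are hypothetical.

```python
import json


def build_inquiry_document(
    content_id: int,
    value: str,
    inquirer: dict[str, str],
    inquiry: dict[str, object],
) -> dict[str, object]:
    # ObjectFields are stored as nested JSON objects; queries address their
    # subfields with dot notation, e.g. "inquirer.raw"
    return {
        "id": content_id,
        "value": value,
        "inquirer": {"name": inquirer["name"], "raw": inquirer["raw"]},
        "inquiry": {key: inquiry[key] for key in ("title", "id", "number", "is_oral")},
    }


# Hypothetical sample values, for illustration only
doc = build_inquiry_document(
    content_id=1,
    value="Text of the inquiry content element",
    inquirer={"name": "Member A", "raw": "Member A"},
    inquiry={"title": "Sample inquiry", "id": 10, "number": 5, "is_oral": True},
)
print(json.dumps(doc, indent=2))
```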

The serializer and search view for this document look like this:

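from rest_framework import status
from rest_framework.decorators import api_view
from rest_framework.request import Request
from rest_framework.response import Response


# ContentElementSearchSerializer and SearchData are defined elsewhere in the project
class InquiryContentSearchSerializer(ContentElementSearchSerializer):
    parent_type = "inquiry"
    document_type = "inquiry"


@api_view(["GET"])
def search(request: Request, format=None) -> Response:
    result = None
    # Collect the query parameters (e.g. query, document_type) into SearchData
    data = SearchData(**request.query_params.dict())
    if not data.query:
        return Response("Bad search request", status=status.HTTP_400_BAD_REQUEST)

    match data.document_type:
        case "inquiry":
            hits = (
                InquiryContentDocument.search()
                # Match against the content text and the inquirer.raw field
                .query(
                    "multi_match",
                    query=data.query,
                    fields=["value", "inquirer.raw"],
                )
                # Return highlighted fragments of the matching content
                .highlight("value")
            )
            serializer = InquiryContentSearchSerializer(hits, many=True)
            result = Response(serializer.data)
        # ...
        case _:
            # Missing or unknown document_type
            result = Response("Bad search request", status=status.HTTP_400_BAD_REQUEST)

    return result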
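For reference, the inquiry search above corresponds roughly to the following OpenSearch request body. This is a hand-written approximation expressed as a plain dictionary so it can be inspected or sent with any HTTP client; build_search_body is a hypothetical helper, and calling to_dict() on the real Search object shows the exact output.

```python
import json


def build_search_body(query: str) -> dict:
    return {
        # Match the query text against the content and the inquirer.raw subfield
        "query": {
            "multi_match": {
                "query": query,
                "fields": ["value", "inquirer.raw"],
            }
        },
        # Ask OpenSearch for highlighted fragments of the matched content
        "highlight": {"fields": {"value": {}}},
    }


print(json.dumps(build_search_body("water supply"), indent=2))
```

Sent as the body of POST /inquiry/_search (the index name comes from Index.name), this should return hits together with their highlighted fragments.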

Serving the application

As with most Django applications, we use gunicorn to serve the backend. On the frontend side, Vite first builds the final application. Caddy then serves the built website and forwards all API requests to gunicorn:

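:8080 {
	# Compress responses
	encode zstd gzip

	# Serve the built frontend files
	root * /data
	file_server

	# Forward API requests to gunicorn in the backend container
	reverse_proxy /api backend:8000
	reverse_proxy /api/* backend:8000
}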
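Both reverse_proxy lines are needed because a Caddy path matcher is an exact match unless it ends in a wildcard: /api matches only that path, while /api/* matches everything beneath it. Everything else falls through to file_server. Here is a simplified Python model of that routing decision; it is an illustration only, route is a hypothetical name, and it ignores the finer points of Caddy's matcher semantics.

```python
def route(path: str) -> str:
    # "reverse_proxy /api"   -> exact match only
    # "reverse_proxy /api/*" -> anything under /api/
    if path == "/api" or path.startswith("/api/"):
        return "backend:8000"
    # Everything else is served as a static file from /data
    return "file_server"


print(route("/api/hansard.json"))  # backend:8000
print(route("/apiary"))  # file_server
```

The /apiary case shows why a single /api* matcher would be broader than intended.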

Container

Deployment is hard, especially when we need a consistent execution environment, and shipping applications in containers is one solution. Containerizing the frontend and backend is simple, and their Dockerfiles are straightforward.

The database, PostgreSQL, needs little configuration. OpenSearch, however, requires some setup. We created a script that generates the certificates referenced by the OpenSearch configuration, and we then generate an internal_users.yml file for the admin user. Because this file contains credentials, we exclude it from the code repository.
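Because the generated files are kept out of the repository, a fresh checkout will not contain them. A small preflight check can catch that before the containers start. The sketch below is hypothetical (neither the check nor missing_files is part of the project); the paths are the host-side files mounted in the compose file that follows.

```python
from pathlib import Path

# Host-side files mounted into the containers; all are generated locally
REQUIRED_FILES = [
    "certificates/root/root-ca.pem",
    "certificates/admin/admin.pem",
    "certificates/admin/admin-key.pem",
    "certificates/node/node.pem",
    "certificates/node/node-key.pem",
    "podman/opensearch/usr/share/opensearch/config/opensearch-security/internal_users.yml",
]


def missing_files(project_root: Path) -> list[str]:
    # Report every required file that does not exist yet
    return [name for name in REQUIRED_FILES if not (project_root / name).is_file()]
```

Calling missing_files(Path.cwd()) from the project root before starting the stack returns an empty list once the certificates and internal_users.yml have been generated.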

A sample Docker Compose file looks like this:

services:
  database:
    image: "postgres:16"
    env_file:
      - .env.docker
    networks:
      - legisdata

  frontend:
    build:
      context: .
      dockerfile: podman/frontend/Dockerfile
      name: legisdata_frontend
      no_cache: true
      pull: true
    ports:
      - 0.0.0.0:8080:8080
    env_file:
      - .env.docker
    networks:
      - legisdata

  backend:
    build:
      context: .
      dockerfile: podman/backend/Dockerfile
      name: legisdata_backend
      no_cache: true
      pull: true
    volumes:
      - ./certificates/root/root-ca.pem:/app/root-ca.pem
      - ./certificates/admin/admin.pem:/app/admin.pem
      - ./certificates/admin/admin-key.pem:/app/admin-key.pem
      - ./certificates/node/node.pem:/app/node.pem
      - ./certificates/node/node-key.pem:/app/node-key.pem
    env_file:
      - .env.docker
    networks:
      - legisdata

  search-node:
    image: opensearchproject/opensearch:latest
    env_file:
      - .env.docker
    environment:
      - node.name=search-node
    ulimits:
      memlock:
        soft: -1 # Set memlock to unlimited (no soft or hard limit)
        hard: -1
      nofile:
        soft: 65536 # Maximum number of open files for the opensearch user - set to at least 65536
        hard: 65536
    volumes:
      - search-data:/usr/share/opensearch/data
      - ./podman/opensearch/usr/share/opensearch/config/opensearch-dev.yml:/usr/share/opensearch/config/opensearch.yml
      - ./podman/opensearch/usr/share/opensearch/config/opensearch-security/internal_users.yml:/usr/share/opensearch/config/opensearch-security/internal_users.yml
      - ./certificates/root/root-ca.pem:/usr/share/opensearch/config/root-ca.pem
      - ./certificates/admin/admin.pem:/usr/share/opensearch/config/admin.pem
      - ./certificates/admin/admin-key.pem:/usr/share/opensearch/config/admin-key.pem
      - ./certificates/node/node.pem:/usr/share/opensearch/config/node.pem
      - ./certificates/node/node-key.pem:/usr/share/opensearch/config/node-key.pem
    ports:
      - 9200:9200
      - 9600:9600
    networks:
      - legisdata

volumes:
  search-data:

networks:
  legisdata:
    name: legisdata

Closing thoughts

The website is only a proof of concept, but it shows what becomes possible once the data is available. A more accessible interface eases navigating and discovering the data. Because the data is also published through a set of APIs, other applications can integrate it as well, which extends its usefulness further.